OMMDB: Open Multimodal Deep Breathing dataset

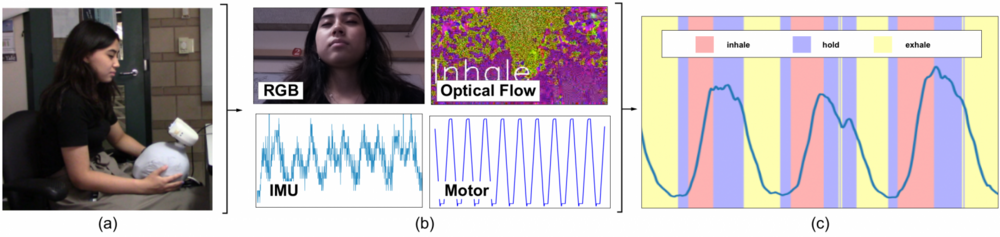

A visualization of deep breathing phase classification with a robot using OMMDB. (a) A participant deep breathing with an Ommie robot during data collection. (b) Visual examples of multimodal data from the robot’s sensors. (c) Deep breathing phase predictions for inhales, holds, and exhales.

OMMDB is an open dataset for researchers developing machine learning methods for deep breathing phase detection and recognition. The dataset contains recordings of 47 young adults performing deep breathing with an Ommie robot under multiple conditions of robot ego-motion and breathing cadence. Three data streams from the robot are included: RGB video, inertial sensor data, and motor encoder data. Ground-truth breathing data is provided as force measurements from a respiration belt, along with annotated labels of breathing phases derived from these signals. Further information on this dataset will be available upon manuscript publication in Fall 2023.
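To illustrate what phase labels derived from a respiration-belt signal can look like, here is a minimal sketch that tags each sample as an inhale, hold, or exhale from the local slope of the belt force. This is not the annotation procedure used for OMMDB. The function names, the sampling-rate parameter `fs`, the `slope_thresh` value, and the assumption that belt force rises during inhalation are all hypothetical choices made for illustration.

```python
from typing import List, Tuple


def label_phases(force: List[float], fs: float, slope_thresh: float) -> List[str]:
    """Hypothetical slope-threshold labeling of a respiration-belt force signal.

    Assumes force increases as the chest expands (inhale) and decreases as it
    contracts (exhale); samples with near-zero slope are labeled 'hold'.
    """
    n = len(force)
    if n == 0:
        return []
    if n == 1:
        return ["hold"]  # no slope can be estimated from a single sample
    labels = []
    for i in range(n):
        # Central difference in the interior, one-sided difference at the edges.
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        slope = (force[hi] - force[lo]) * fs / (hi - lo)  # force units per second
        if slope > slope_thresh:
            labels.append("inhale")
        elif slope < -slope_thresh:
            labels.append("exhale")
        else:
            labels.append("hold")
    return labels


def to_segments(labels: List[str]) -> List[Tuple[str, int, int]]:
    """Collapse per-sample labels into (phase, start, end) runs; end is exclusive."""
    segments = []
    start = 0
    for i in range(1, len(labels) + 1):
        if i == len(labels) or labels[i] != labels[start]:
            segments.append((labels[start], start, i))
            start = i
    return segments


if __name__ == "__main__":
    # Toy signal: rise, plateau, fall (not real OMMDB data).
    toy = [0, 1, 2, 3, 3, 3, 2, 1, 0]
    print(to_segments(label_phases(toy, fs=1.0, slope_thresh=0.5)))
```

On the toy signal above this yields one inhale, one hold, and one exhale segment of three samples each. Real belt data would typically need smoothing and a threshold tuned to the sensor and sampling rate before a rule like this is usable.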

For access to OMMDB, please reach out to one of the following: kayla.matheus@yale.edu, ellie.mamantov@yale.edu, brian.scassellati@yale.edu, marynel.vazquez@yale.edu

For more information about the Ommie robot that this dataset was collected with, please see: https://scazlab.yale.edu/ommie-robot

Publication Reference:

K. Matheus, E. Mamantov, M. Vázquez, B. Scassellati (2023). Deep Breathing Phase Classification with a Social Robot for Mental Health. In International Conference on Multimodal Interaction (ICMI ’23), October 9–13, 2023, Paris, France. ACM, New York, NY, USA, 10 pages. https://doi.org/10.1145/3577190.3614173