Nonverbal Communication for Human-Robot Interaction: Henny Admoni

Robotics has already improved lives by taking over dull, dirty, and dangerous jobs, freeing people for safer, more skillful pursuits. For instance, autonomous mechanical arms weld cars in factories, and autonomous vacuum cleaners keep floors clean in millions of homes. However, most currently deployed robotic devices operate primarily without human interaction, and are typically incapable of understanding natural human communication.

As robotic hardware costs decrease and computational power increases, robotics research is moving from these autonomous but isolated systems to individualized personal robots. Robots at home can help elderly or disabled users with daily tasks, such as preparing a meal or getting dressed, which can increase independence and quality of life. Robots for manufacturing can act as intelligent third hands, improving efficiency and job safety for workers. Robot tutors can provide students with one-on-one, personalized lessons to augment their classroom time. Robot therapy assistants can act as social conduits between those with social impairments, such as autism, and their caretakers or therapists.

To be good social partners, robots must understand and use existing human communication structures. While verbal communication tends to dominate human interactions, nonverbal communication, such as gestures and eye gaze, augments and extends spoken communication. Nonverbal communication happens bi-directionally in an interaction, so personal robots must be able to both recognize and generate nonverbal behaviors. The behaviors to be selected are extremely dependent on context, with different types of behaviors accomplishing different communicative goals like explaining information or managing conversational turn-taking. To be effective in the real world, this nonverbal awareness must occur in real time in dynamic, unstructured interactions.

My research focuses on developing bidirectional, context aware, real time nonverbal behaviors for personally assistive robots. Developing effective nonverbal communication for robots engages a number of disciplines including autonomous control, machine learning, computer vision, design, and cognitive psychology. All of my work is driven by the vision of designing robots that make people’s lives better through greater independence, education, and workplace efficiency.

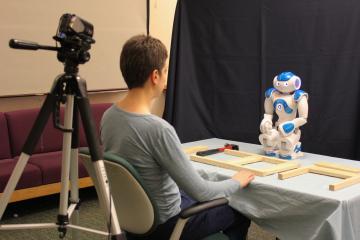

To understand human perceptions of robots, I have conducted a series of lab-based experiments. These carefully controlled studies untangle the nuances of human perception. In one study, I show that robot faces (both anthropomorphic and abstract ones) are cognitively processed more like symbols, such as arrows, than as social elements like human faces (Admoni et al., 2011). In another study, I manipulated the type of user-directed gaze performed by a robot, and showed that short, frequent glances are better for conveying attention than longer, more infrequent stares (Admoni et al., 2013). In a third study, I manipulated the smoothness of an object handover from robot to human by introducing a slight delay in the handover action. This seemingly small change led to dramatic differences in the way the robot’s eye gaze was interpreted, from a seemingly unimportant stimulus to a relevant social cue (Admoni et al., 2014). I also showed that conflicts between what a robot says and the nonverbal behavior it performs can be easily resolved by users, providing support for the benefit of nonverbal communication even when that communication is not perfect (Admoni et al., 2014b).

It is important to know how robot behaviors are perceived, but we must also understand the characteristics of human communication, particularly as they relate to the context of the interaction. In this research, context is the purpose or intention behind a communicative act. Different contexts require different responses from the robot. For example, a “spatial reference” context might involve attending to what object is being referenced, while a “fact-conveying” context might involve recording and acknowledging what is being spoken. Using machine learning, I trained a model for human nonverbal behavior based on annotated observations of human-human interactions in a collaborative spatial task (Admoni et al., 2014c). The model can successfully predict the context of a person’s communication based on their nonverbal behaviors. It can also generate nonverbal robot behaviors given a desired context. This allows robots to not only communicate through nonverbal behaviors, but also understand a human user’s communication. This bidirectionality is a critical part of social interaction: without recognition, a robot may produce the right kinds of nonverbal behaviors, but is essentially blind to human communication; without generation, the robot is inefficient and potentially unclear.

Offline machine learning is useful for modeling human behavior, but assistive robots need to understand and generate communication in real time. To address this, I am developing a robot behavior controller that selects nonverbal behaviors to communicate object references in spatially collaborative tasks. Critically, the controller relies only on perceived components of the scene, rather than offline annotation of data, to pick appropriate behaviors. This allows it to operate in real time. Scene processing is inspired by a psychological understanding of human cognition. It takes into account both low level cues, such as visual object saliency, and high level cues, such as verbal references. In video-based tests with human users, the controller effectively selects the nonverbal behavior that best disambiguates object references in several scenarios.